Palaces in the Cloud

On memory, machines, and the forgotten art of medieval mnemonics

In 1532, an Italian scholar named Giulio Camillo unveiled a curious attraction in a Venetian square: the Theater of Memory. No one knows exactly what it looked like—no drawings survive—but visitors at the time described it as a wooden chamber lined with drawers and shuttered windows, each inscribed with symbols and bits of writing.

Open the right sequence of panels, Camillo promised, and the wisdom of the ancients would be revealed: the teachings of Solomon, the movements of celestial bodies, the seven virtues and vices, and more. It was, he claimed, “a constructed mind and soul.”

Like so many promising new inventions, the theater sparked a wave of public excitement and breathless commentary among the literati. It even attracted speculative investment, before failing to deliver on its promise and bankrupting its inventor.

Today, we live in our own age of memory palaces: AI systems promising instant access to humanity’s collective wisdom and insight. Artificial General Intelligence (AGI), we are told, is right around the corner. And yet, as with Camillo’s theater, much depends on a kind of mnemonic sleight-of-hand.

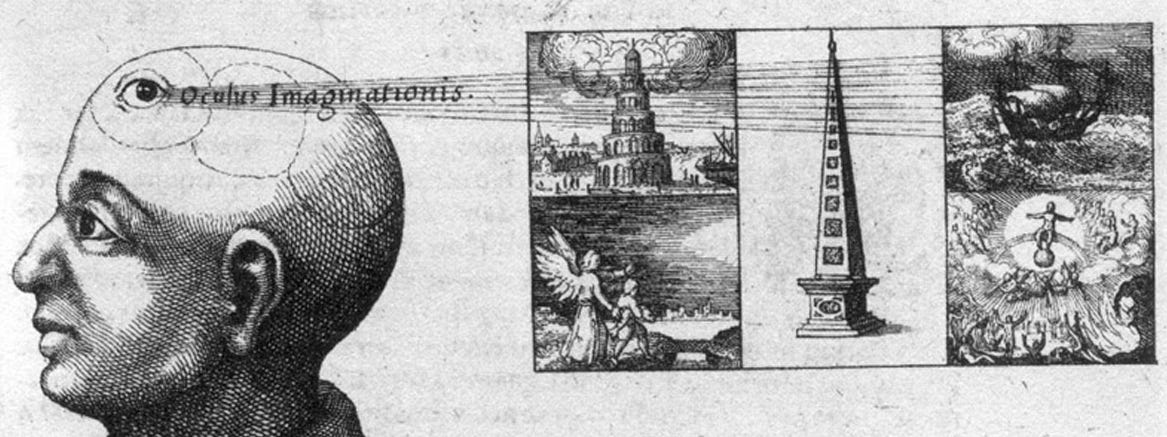

In truth, Camillo’s theater was a simplified version of a much older tradition: the ars memoriae (“art of memory”). For centuries, European monks trained in this demanding practice, visualizing elaborate palaces filled with symbolic imagery. One practitioner boasted that he could recall every known fact across an astonishing range of topics: theology, astronomy, metaphysics, law, arithmetic, music, geometry, logic, and grammar. Another claimed to have memorized two hundred classical speeches, three hundred philosophical sayings, twenty thousand points of legal doctrine, and more. It took years of training—and austere mental discipline—to master the technique.

Camillo, a former monk, promised the public a shortcut: access to a vast storehouse of recorded knowledge, without all the troublesome mind training. Anyone who entered his theater, he boasted, would emerge newly possessed of the wisdom of Cicero.

When he unveiled a prototype version of the theater in Venice, the public raved. Spectators lined up to pay and enter. Word of the spectacle even reached the King of France, who took a personal interest in the project and promised to fund its continued development to the tune of five hundred ducats (a princely sum, the Renaissance equivalent of startup capital).

And then, as with so many disruptive technologies, the bubble burst. The project went over budget, production delays mounted, and work languished. Camillo found himself plunged into debt. He died just a few years later with his theater still unfinished, but nonetheless convinced that he had seen a glimpse of the future.

In a sense, he had.

Five centuries later, that dream has migrated to the cloud. Today’s large language models (LLMs) are neural networks trained on vast corpora of text, the written record of humanity’s collective intelligence, which they compress into patterns of numerical weights. They do more than just record facts; they create a semantic architecture of recall and retrieval. They are, to borrow Camillo’s phrase, “a constructed mind.”

LLMs are often described as if they possess knowledge, but what they really contain is structure: a vast lattice of associative trails. They organize words, images, and concepts not into imaginary corridors and arches, but across thousands of mathematical dimensions. In these latent spaces, proximity signals likeness, and traversal produces the illusion of thought. In this sense, they bear at least a surface resemblance to the memory palaces of old.

Yet that resemblance conceals a fundamental inversion. The art of memory depended on an act of embodied imagination: to remember was to re-enact knowledge in a visualized space, to locate it within one’s own moral and sensory world. An LLM’s “memory,” by contrast, is disembodied and mechanistic. It remembers nothing. It merely reproduces statistical associations. It runs on ghosts in the machine.

The astonishing rise of AI has prompted endless waves of hype, as well as occasional bouts of fervid hand-wringing. Nicholas Carr warns that ceaseless streams of connected data change not only what we know but how we know, as we all become “engaged in the production of a facsimile of the world.” In a similar vein, Paul Kingsnorth suggests these systems do more than extend our minds; they reorganize our inner lives. “The Machine we are building is taking us at warp speed into a new way of being human,” he writes. “Away from the spiritual, towards the material; away from nature, towards technology; away from organic culture towards a planned technocracy.”

One can easily imagine sixteenth-century monastics lobbing similar critiques at Camillo’s degraded version of their sacred discipline.

For the medieval scholar, the art of memory amounted to a deeply spiritual practice. To remember was to practice discernment—to decide what was worth remembering and what could safely be forgotten. The memory palace was never meant to grant access to universal knowledge, but rather to act as a kind of mnemonic scaffolding to help practitioners cultivate their own inner wisdom.

Where the medieval monk sought inner moral virtue through recollection, the LLM optimizes for scale. It has no moral compass, but it has a facsimile of one in the form of embedded prompts geared toward implementing corporate policies, regulatory guidelines, and ethical guardrails. In other words, the choice of what gets remembered and what gets forgotten is no longer a personal decision; the machine’s performative morality is a distributed function, a disembodied specter of rules floating in the cloud.

The architecture of a medieval memory palace expressed a distinctive, highly individualized worldview: a way of distinguishing what mattered from what could be forgotten. But LLMs know no such restraint. They proceed from the premise that nothing should be forgotten, and that wisdom will arise not from individual contemplation but from an emergent, machine-augmented process.

Yet access to machines with perfect recall can foster a kind of collective amnesia. Models trained on vast bodies of data threaten to blur the boundary between signal and noise, collapsing the distinctions (moral, emotional, mnemonic) that give human memories their purpose. Picture a Borgesian palace whose rooms keep dissolving into one another, its corridors looping through recursive chambers that echo without end.

The result is a paradox: a civilization that has forgotten the art of forgetting. We have built engines of total remembrance, yet our capacity for inner reflection and discernment risks being hollowed out. Camillo’s theater promised wisdom through an externalized memory aid. Our neural palaces promise something similar, but they risk drowning out our inner wisdom with mere gestures of recall, cut off from the deeper, meaning-making faculties of comprehension and contemplation.

And yet, in their emptiness, these neural cloud palaces achieve a kind of tragic magnificence. They embody the ancient dream of universal knowledge. Like Borges’s Funes, they recall everything and understand almost nothing. Each prompt we type is a footstep through corridors that no longer require us to walk, corridors that extend endlessly through the cloud—infinitely precise, infinitely forgetful.

For centuries, the art of memory represented a deeply human endeavor: a fusion of imagination, spatial reasoning, and moral philosophy. Now AI performs mnemonic labor once reserved for monks, poets, and scholars. What we gain in speed and scale, we risk losing in attention and interpretation — trading embodied remembrance for probabilistic recall.

All that said, LLMs surely have their uses. They can help us sift through vast research collections, identify hidden patterns, and shed new light on the way knowledge accretes over time. They can provide all kinds of useful scaffolding—if we use them with intention.

Nowhere is it written that technology must flatten our inner lives. Like many powerful new inventions, these systems carry a double-edged promise: they can free us for deeper thought, or tempt us into the purgatory of passive retrieval.

And what of Giulio Camillo? For all his clever feats of mnemonic carpentry and bold proclamations, the theater never delivered the promised results. No one ever attained the wisdom of Cicero, except for Cicero himself.

Today, we are building the palaces of Camillo’s dreams — only exponentially larger, faster, and distributed everywhere all at once. The question, as ever, is not so much what the machine can do for us, but what we choose to do with it—and why.

P.S. If you’d like to learn more about the legacy of the ars memoriae, allow me to recommend Frances Yates’s classic 1966 book, The Art of Memory.

Interesting piece. Always helpful to see some historical context. I want to emphasize the point you ended with: it's up to us. Technology has value; it doesn't have values. You make the point that LLMs don't have a moral compass. I would disagree with that. Some will and do have a moral compass, as your reference to ethical policies suggests. Some absolutely will not. But then again, as is always the case with technology, whether we use it for evil or good is up to us.

Great think piece, Alex. Several years ago I read Joshua Foer’s book “Moonwalking with Einstein” and was fascinated by the concept of the memory palace. I like the way you connected and contrasted that with the current AI trajectory. Lots to chew on.